Mathematics is the poetry of logical ideas. Most of the time in Mathematics, we cannot just claim that a statement is true. We have to pile up a sequence of logical deductions, one after another, in order to form a “proof” that demonstrates the validity (or falsehood) of the statement.

The method of “proving” probably originated from the most successful textbook in human history – The Elements by Euclid from Ancient Greek. In its 13 books, Euclid listed out a number of definitions and commonly accepted theorems – known as Axioms. Then, from these few axioms, he proved many of the fundamental theorems in geometry, algebra, and number theory, part of which have now became the core of secondary mathematics curriculum. (Apologies if I reminded you of any unpleasant memories you have)

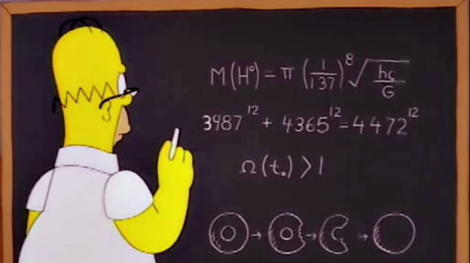

Since then, mathematics have never been short of interesting questions. One example is the famous Fermat’s Last Theorem. The conjecture looks strikingly simple and understandable to everyone. It also seemed to be easily provable, as Fermat claimed that he had already had a proof but there wasn’t enough margin in the book for him to write it out.

As it turns out, of course there wasn’t enough space for the proof (if Fermat had one). After 358 years (1637-1995), it was finally proved by Andrews Wiles in his 150-pages long proof, which was merely the finally kick of the long way towards the goal. Hundreds of mathematicians have spent years on the problem, proving partial results or developing related techniques.

Talking about interesting and influential problems, how can we not mention David Hilbert? He proposed 10 questions in the Paris conference in 1990, and later on added 13 more. These 23 problems, now known as Hilbert problems, set out the roadmap for modern mathematics. Some of them remained unresolved, such as the notorious 8th-Riemann Hypothesis. A prize of 1 million usd will be granted to the first person who proved or disproved the hypothesis.

In the age of computers where much of our work has been simplified, some may wonder if someday computers will take over the job of mathematicians in proving theorems. After all, proving theorems is no more than applying logical rules repeatedly, and computers are extremely fast in trial-and-error.

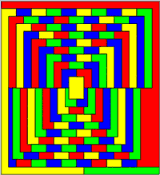

In fact, this is not science fiction or prediction, as it has already happened. In 1976, the Four Color Theorem was proved by computers. The theorem stated that any two-dimensional map can be colored with merely 4 colors in a way such that adjacent regions have different colors. Computers were used because there were so many cases to verify that, if done by hand, would be hundreds of pages long. Some people are concerned whether a proof not verifiable by human should still be counted as a “proof”.

Despite the controversy, the above demonstrated the capability of computers in proving theorems. It seems possible, given enough time, for a fast-enough computer to enumerate all the axioms in mathematics and apply them repeatedly to prove (or disprove) anything, right?

Surprisingly, the answer is no. There exists mathematical statements that can neither be proved, nor disproved. This is known as the Godel’s first incompleteness theorem.

Godel’s First Incompleteness Theorem

Just as mentioned above, a system (e.g. mathematics) consists of a number of axioms and logical rules. We say the system is “complete” if any statement in the system can either be proved, or disproved, based on those rules and axioms.

The system should be free of contradictions. That is, if something has been proved, there should never be a way to disprove it, otherwise it will be both true and false. We call the system “consistent” for not having such contradiction.

The Incompleteness Theorem states that for any system, it cannot be both “complete” and “consistent”. If contradiction is not allowed, there must exist statement that can neither be proved nor disproved, i.e. undecidable. Note that the system here need not be mathematics, it can be any logical system that is sophisticated enough.

The proof by Godel was elegant, but not easy to understand. The first idea is that we don’t really care about language or real-life implications. What we are interested is the process of deduction, so we can replace the elements and symbols of the system with numbers.

A statement is a sequence of symbols, therefore it can be transformed into a sequence of numbers. Furthermore, using prime numbers, we can transform a sequence of numbers into a unique number (a.k.a Godel number)

Here I shall give a very brief outline of the proof:

- First, assign numbers to the basic symbols, such as “not”, “or”, “for all”, “exists”, “zero”, “(“, “)” and variable “x”

- Express concepts one by one as formulae built from the basic symbols, from more concrete ones such as “divisible” and “prime number”, to more general concepts such as “substitute”, “equivalent” and “immediate consequence”. In the end we have a symbolic representation of the concept “provable”.

- Consider a function with one variable. We can transform it into a sequence of symbols, then transform into its Godel number. We can substitute the Godel number back into the function to create a self-referential statement.

- Statement “This sentence is not provable within the system” can be expressed with the method in step 3. If the statement is provable, the statement becomes false, i.e. a false statement is provable in the system, hence inconsistent. Similarly, disproving the statement causes inconsistency. Therefore, it cannot be proved or disproved without causing contradiction.

(For interested readers, a simplified version of Godel’s proof can be found at http://www.research.ibm.com/people/h/hirzel/papers/canon00-goedel.pdf)

As a result, no matter how the axioms are set, either the system is not “complete” (i.e. exists statements not decidable), or it is not consistent (i.e. exists statements both proved and disproved). It is like trying to cover an 8×8 chessboard with L-shaped tiles – either there are uncovered grids, or some tiles overlap.

Then there is Godel’s Second Incompleteness Theorem, which is even more interesting. It states that if the system can prove its own consistency, then it is inconsistent. In other words, “If you claim to be always right, you must be wrong!”

The Second Incompleteness Theorem in part solved the 2nd Hilbert’s problem, which asks for a proof of the consistency of the mathematical system (specifically the axioms of arithmetic). Such a proof cannot exist within the system itself.

So, in the current mathematical system, where does the “incompleteness” lie? One example would be the “This statement is not provable” as mentioned in the proof above. Is there any other example that is interesting?

Some Infinities Are Bigger Than Others

Everyone should be familiar with the concept of “infinity”, but do you know that some “infinity” is strictly greater than other “infinity”?

For example, how many positive integers are there? Infinitely many. How about positive integers that are even? Also infinitely many. Intuition may lead us to think that the first infinity is greater than the second, but actually they have the same size, because we can pair up a positive integer with an even number: (1,2), (2,4), (3,6), etc. We say that even numbers are Countable, because we can list them out in a sequence: 2,4,6,8,10,… where each element appears exactly once. It is easy to see, the size of any Countable set (e.g prime numbers, rational numbers) is equal to the size of positive integers. Mathematicians have a fancy term for this kind of infinity, called Aleph-null ().

What about the size real numbers? Is it equal to the “countable” infinity? It can be easily shown that real numbers are Uncountable. There are more real numbers than integers can count.

Suppose you have an ingenious way to arrange all positive real numbers less than 1 into a sequence. It is always to construct a new real number less than 1, such that it is not in your sequence, by taking the first decimal from the first number, +1, then use it as the first decimal of the constructed number, and so on. The constructed number must differ from the nth number in the sequence at the nth decimal place, hence it is totally new.

There is another fancy term for this larger infinity (size of real numbers) called Beth-one (). We have showed that Beth-one > Aleph-null, but is there anything between these two infinities? This question is precisely the 1st Hilbert problem. In 1963, Paul Cohen gave an answer to this.

His answer? It can neither be proved nor disproved within the commonly-accepted mathematical system (ZFC set theory). It shall remain as a hypothesis (Continuum Hypothesis). It is your choice to add the hypothesis, or its opposite, as a new axiom.

The continuum hypothesis has no effect to common people like us, as our intuition has nothing to say about whether it seems to be true or not. However, it remains as an interesting evidence of the Incompleteness of our mathematical system.

Implications on artificial intelligence

In the early years of development of artificial intelligence, human cognition was thought to be rule-based manipulation of symbols. For example, when we speak, we first organize the components of the idea in a specific order, then find the suitable words, finally express it in accordance with grammatical rules.

According to their theory, we have formed a number of rules in our mind such that when we receive a stimulus, our mind go through those rules to find out relevant pieces of memory and idea. The process of thinking is manipulation of abstract ideas, separated from the physical world. Even emotions can be described by a series of logical deductions. Therefore, if we can write a sophisticated-enough algorithm on a computer, which include all the logical rules of human thinking, it can “think” like human, and act like human. This approach to artificial intelligence was called GOFAI (Good Old Fashioned AI).

However, Godel’s Incompleteness Theorems showed that any formal system, if consistent, must be incomplete. Hence, for a GOFAI, there must be a Godel sentence which is true but the system cannot prove, while human (mathematicians, you, me) can see the truth in that sentence. It leads to the conclusion that GOFAI can never match against human mind; there is something fundamentally different between the two.

How does our minds work then? One class of theories suggest that instead of step-by-step deductions, we think with heuristics to get answers quick and correct most of the time. Consistency is not guaranteed, but this is acceptable because we allow mistakes from even the most intelligent people. Another class of theories points out that human mind is a dynamic system rather than static. When we learn, we don’t just learn about new facts and ideas, we change our deduction algorithm in the process. Therefore we can defeat the limitations of Incompleteness of systems. Both class of theories led to major developments in AI after GOFAI.